This week, thousands of TikTok users were driven into an apocalyptic frenzy as online platforms buzzed with viral forecasts of the ‘Rapture’.

Somewhat embarrassingly for the preachers who foretold it, however, the anticipated End of Days has now passed without incident.

Now, experts have revealed what the end times will actually look like.

And the grim truth of human extinction is much more sorrowful than any tale of biblical destruction.

From the danger of rogue AI or nuclear war to the growing threat of engineered bioweapons, humans themselves are responsible for the greatest risks to their own existence.

Dr. Thomas Moynihan, a scientist at Cambridge University's Centre for the Study of Existential Risk, told the Daily Mail: ‘Apocalypse is an ancient concept, originating from religious beliefs, but extinction is a relatively new idea, based on scientific understanding of the natural world.’

When discussing extinction, we envision the human race vanishing while the rest of the universe continues to exist endlessly, in its immense scale, without our presence.

This is quite unlike what Christians envision when they discuss the Rapture or the Day of Judgment.

Nuclear war

Researchers examining the potential annihilation of humankind refer to ‘existential risks’: threats that have the capacity to wipe out the human race.

Ever since humans learned to split the atom, one of the most pressing existential risks has been nuclear war.

During the Cold War, fears of nuclear war were so high that governments around the world were seriously planning for life after the total annihilation of society.

The danger of nuclear conflict decreased following the collapse of the Soviet Union, but specialists now believe the danger is increasing.

Earlier this year, the Bulletin of the Atomic Scientists advanced the Doomsday Clock one second nearer to midnight, citing a higher likelihood of nuclear conflict.

The nine nations that have nuclear weapons collectively own 12,331 warheads, with Russia alone holding enough to obliterate 7 percent of urban areas across the globe.

Nevertheless, the alarming possibility remains that human civilization could be eradicated by just a small portion of these weapons.

Dr. Moynihan states: “Recent studies indicate that even a more localized nuclear conflict could result in global climatic consequences.”

Debris from urban fires could rise into the stratosphere, where it would reduce sunlight, leading to agricultural failures.

A comparable event contributed to the extinction of the dinosaurs, although this was triggered by an asteroid impact.

Research indicates that a so-called ‘nuclear winter’ would likely be significantly more severe than what was previously estimated during the Cold War era.

Using modern climate models, researchers have shown that a nuclear exchange would plunge the planet into a ‘nuclear little ice age’ lasting thousands of years.

Reduced sunlight would plunge global temperatures by up to 10˚C (18˚F) for nearly a decade, devastating the world’s agricultural production.

Modeling indicates that a minor nuclear conflict between India and Pakistan would leave 2.5 billion people without food for a minimum of two years.

In the meantime, a worldwide nuclear conflict would result in the immediate death of 360 million civilians and cause the starvation of 5.3 billion individuals within two years after the initial detonation.

Dr. Moynihan states: “Some claim it’s difficult to establish a definitive connection from this to the complete extinction of all humans globally, but we don’t wish to discover the truth.”

Engineered bioweapons

Similar to the danger posed by nuclear weapons, another potential scenario for humanity’s extinction could involve the deployment of a man-made biological weapon.

Since 1973, when scientists created the first genetically modified bacteria, humanity's ability to engineer lethal diseases has been steadily growing.

Human-created illnesses present a far more serious danger to our survival than any natural occurrences.

Otto Barten, the founder of the Existential Risk Observatory, told the Daily Mail: ‘We have considerable experience with natural pandemics, and none have resulted in human extinction over the past 300,000 years.’

Thus, even though natural pandemics pose a significant threat, it is highly improbable that they will lead to our total extinction.

Nevertheless, human-created pandemics could be designed to enhance their impact, something that does not happen naturally.

Currently, the means to create such deadly diseases are limited to a handful of states that wouldn’t benefit from unleashing a deadly plague.

Nevertheless, researchers have cautioned that advancing technologies such as AI mean this capability is likely to become accessible to a growing number of people.

Should terrorists acquire the capability to develop lethal bioweapons, they might introduce a pathogen that could rapidly spread beyond control, ultimately resulting in the extinction of the human race.

What would remain would be a world that appears as it is today, yet devoid of any signs of human life.

Dr. Moynihan remarks, “Extinction represents, in this sense, the complete breakdown of any moral structure; once more, within a universe that continues, quietly, without us.”

Rogue artificial intelligence

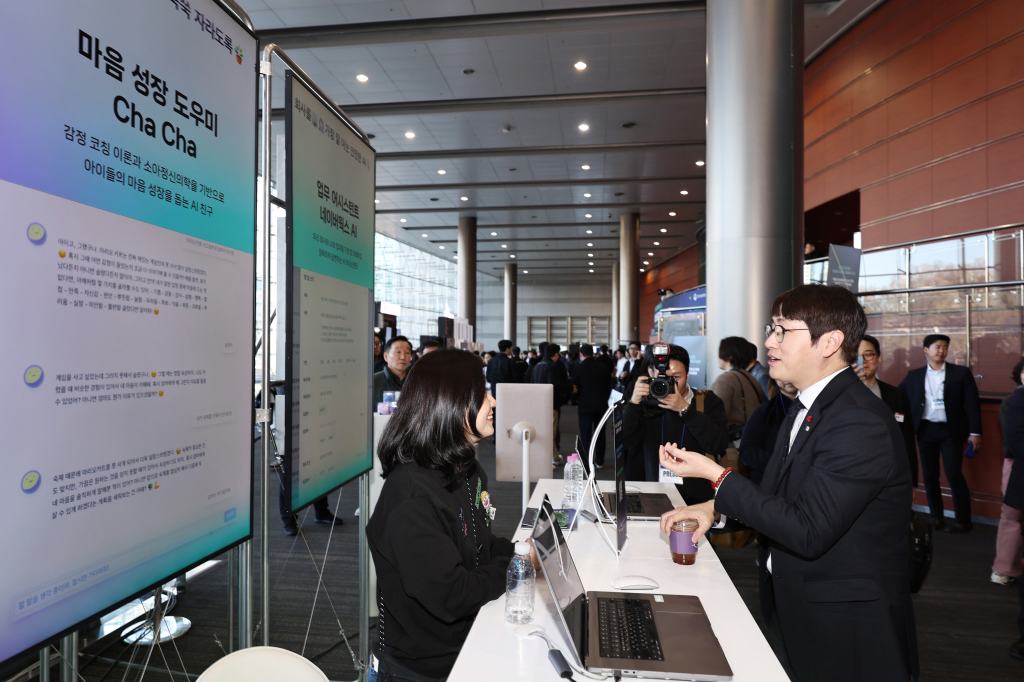

Many specialists today think the greatest threat that humans are generating for themselves is artificial intelligence.

Experts examining existential threats believe there is a probability ranging from 10 to 90 percent that humans may not endure the arrival of superintelligent artificial intelligence.

The big concern is that a sufficiently intelligent AI will become ‘unaligned’, meaning its goals and ambitions will cease to line up with the interests of humanity.

Dr Moynihan says: ‘If an AI becomes smarter than us and also becomes agential — that is, capable of conjuring its own goals and acting on them.’

If an AI gains autonomy, it could eliminate humanity without ever being actively hostile.

If an AI with autonomous capabilities has an objective that conflicts with human desires, it would inherently perceive humans attempting to shut it down as an obstacle to its goal and would take all possible measures to stop that from happening.

The AI could be completely unconcerned about humans, yet choose to redirect the resources and systems that sustain human life toward advancing its own goals.

Scientists are uncertain about what those objectives could be or the methods an AI might use to achieve them, which is precisely why a misaligned AI poses such a significant risk.

“The issue is that you can’t forecast the behavior of a being vastly more intelligent than yourself,” states Dr. Moynihan.

It’s challenging to envision how we might predict, stop, or hinder the AI’s plans.

Another major concern is that specialists are uncertain about the specific methods an AI could use to eliminate humans.

Some experts have suggested that an artificial intelligence could gain control over existing military systems or nuclear missiles, manipulate people into following its commands, or develop its own biological weapons.

Nevertheless, the more alarming possibility is that AI could eliminate us in a manner we are utterly unable to imagine.

Dr. Moynihan states: “The common concern is that an AI more intelligent than humans would have the capability to control matter and energy with much greater precision than we are able to achieve.”

‘Drone strikes would have been incomprehensible to the earliest human farmers: the laws of physics haven’t changed in the meantime, just our comprehension of them.

‘Regardless, if something like this is possible, and ever does come to pass, it would probably unfold in ways far stranger than anyone currently imagines. It won’t involve metallic, humanoid robots with guns and glowing scarlet eyes.’

Climate change

Mr Barten says: ‘Climate change is also an existential risk, meaning it could lead to the complete annihilation of humanity, but experts believe this has less than a one in a thousand chance of happening.’

Nevertheless, there are some improbable situations where climate change might result in the end of humanity.

For instance, should the planet heat up sufficiently, significant quantities of water vapor might rise into the upper atmosphere, creating a process referred to as the moist greenhouse effect.

There, powerful solar radiation would split the water into oxygen and hydrogen, which is light enough to readily escape into space.

At the same time, moisture in the air would weaken the mechanisms that normally prevent gases from escaping.

This could result in a self-perpetuating cycle where all the water on Earth is lost to space, rendering the planet dry and completely uninhabitable.

The good news is that, even though global warming is causing temperatures to rise, the moist greenhouse effect will only occur if the climate becomes significantly warmer than current scientific projections indicate.

The bad news is that the moist greenhouse effect will almost certainly occur in about 1.5 billion years when the sun starts to expand.
