Sexual deepfakes—explicit images and videos generated through the misuse of generative artificial intelligence (AI)—are proliferating quickly in Japan. These represent a significant violation of human rights, and urgent measures must be taken to address this issue.

In October, a male company employee was detained on charges of distributing indecent electromagnetic data after allegedly using AI to generate explicit images of female celebrities and selling them.

The term deepfake typically refers to technology that generates artificial videos using the likeness or voice of real individuals. Whereas earlier fake videos demanded intricate procedures, such videos can now be produced easily by entering images and text prompts.

Numerous instances have been reported where images of women, taken from social media, graduation albums, school event photographs, and other sources, were used without permission to create composite images featuring nude bodies, which were then shared online.

If actual photographs are used, the Penal Code offense of defamation may apply. If the victim is under 18, the conduct could also violate the Act on the Punishment of Activities Related to Child Prostitution and Child Pornography, which prohibits the production and possession of child pornography.

Nevertheless, when AI is used to create images that merely resemble a specific individual, it remains unclear whether this constitutes defamation. Japan’s law against child prostitution and child pornography covers actual children, which leaves it legally ambiguous whether an image in which every feature except the face has been changed can be considered “real.”

No all-encompassing law exists to govern the use of sexual deepfakes. The government has expressed its plan to handle the issue through current laws, although it is expected that some situations may not be fully covered by existing regulations.

At the same time, certain local authorities have implemented additional measures. The Tottori Prefectural Government passed an ordinance in August aimed at controlling sexual deepfakes. According to the ordinance, individuals who produce child pornography may have their names made public if they fail to comply with requests to remove the images, and they may also face fines. The prefectural government has also urged the national government to enhance efforts in combating such content.

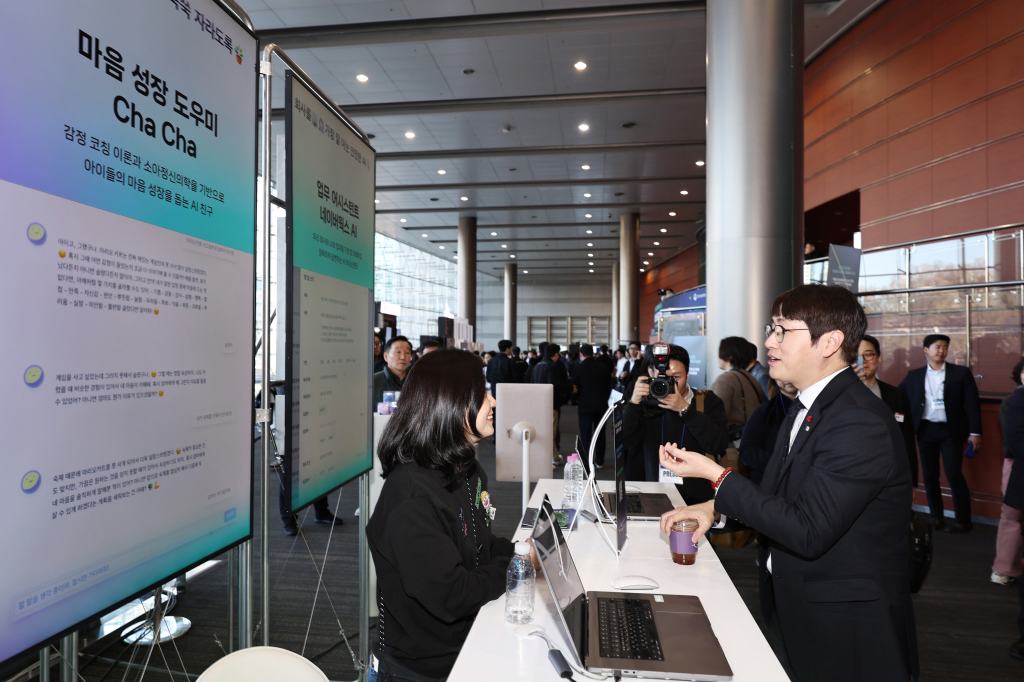

Technical solutions are also under investigation. The National Institute of Informatics is working on a “vaccine” that integrates data into original images to stop AI-driven alterations. Social media platform companies must also implement protective measures.

Because anyone with a smartphone can readily generate such material, education and awareness campaigns aimed at preventing misuse of the technology should also be promoted.

The extent of the psychological wounds endured by victims cannot be measured. Every possible action should be undertaken to address the issue.
