It has emerged that the first assessment of the “National Representative AI” project used benchmarks chosen individually by each company, alongside shared benchmarks, as the standard for evaluating AI capabilities. Unlike Upstage, SK Telecom, NC AI, and LG AI Research, which built large language models (LLMs), Naver Cloud built an omni-modal model that can also interpret images and videos.

In the AI sector, some question whether “LLMs and multi-modal models can be fairly compared on a common scale.” Others ask whether “combining scores from different benchmarks is consistent with the project’s aim of selecting an independent AI foundation model, particularly since Naver incorporated Chinese-made components.”

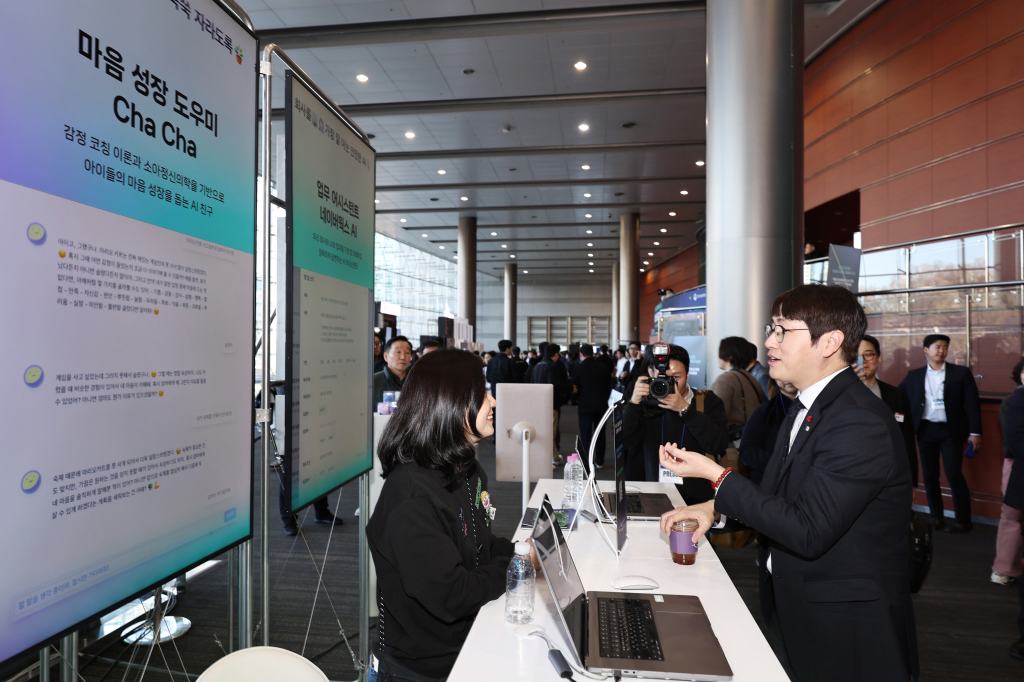

A panel of expert reviewers convened by the Ministry of Science and ICT began assessing the AI foundation models submitted by the five National Representative AI teams at the beginning of this month. Measuring and comparing AI model performance objectively requires standardized evaluation criteria (benchmarks). The Ministry and the National Representative AI teams reportedly held several discussions on which benchmarks to use.

However, Naver Cloud’s model, which unlike those of the other four companies can recognize images and videos, sparked debate during benchmark selection. Naver argued that assessing LLMs and multi-modal models by a single standard was unfair. In the end, the Ministry of Science and ICT and the National Representative AI teams reportedly decided to include two benchmarks selected by each company in addition to the shared benchmarks. In effect, each company will be judged in part on an “examination paper” of its own choosing.

Some critics argue that aggregating scores from separate benchmarks is unfair, especially since Naver adopted Qwen’s “Qwen2.5 ViT” as its vision encoder, a core module of the model. The vision encoder serves as the “eyes” of a multi-modal model, converting external inputs (images, videos) into digital signals the AI can process. AI specialists suggest that Naver used Qwen’s pre-trained weights when implementing its vision encoder. The government originally stated that its aim was to “create AI foundation models independently to ensure self-reliant AI.”

Naver is said to have chosen “TextVQA” and “DocVQA” as its individual benchmarks. The first assesses the ability to read text within images (such as on signs or T-shirts) and answer questions about it, whereas the second evaluates the ability to visually interpret documents, comprehend their content, and answer questions. Both depend on the vision encoder. An AI industry official said, “The core objective of the National Representative AI project is to assess the performance of independent AI foundation models, but Naver’s evaluation depends heavily on Qwen’s vision encoder. Naver claims to have its own vision encoder, so it remains unclear why it opted for a Chinese-developed module, and that choice led to this controversy.”
